Published: by Lucas Rolff

Pulse: Week 11, 2025

Welcome to the fourth edition of our Pulse series, where we share insights into our infrastructure changes, service improvements, and other behind-the-scenes activities at PerfGrid.

nlcp03 outage

On March 5, we experienced an outage on one of our cPanel servers, nlcp03. This was eventually traced to a failing network interface card (NIC), which we resolved by moving the drives to another server.

So what actually happened?

Around 7.30pm UTC, we received a downtime alert from NodePing, which we use for external monitoring, reporting that nlcp03 was down. The server still answered pings, but it was largely unresponsive to HTTP requests. After about two minutes, it became responsive again.
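NodePing performs these checks for us. To show why an HTTP-level check catches failures that a ping alone would miss, here is a minimal Python sketch that uses only the standard library. The function name `http_is_up` and the 5-second default timeout are illustrative choices. They do not describe how NodePing works internally.

```python
import urllib.error
import urllib.request


def http_is_up(url: str, timeout: float = 5.0) -> bool:
    """Return True if `url` answers with a non-5xx HTTP status within `timeout` seconds.

    A host can keep answering ICMP ping while its web stack is stuck, so this
    checks the HTTP layer directly instead of relying on reachability alone.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            # Only 2xx responses reach this point (redirects are followed).
            return True
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; a 4xx still proves the server answers.
        return err.code < 500
    except OSError:
        # URLError (refused connection, DNS failure) and socket timeouts are OSError subclasses.
        return False
```

A monitor would call this periodically, for example `http_is_up("https://example.com/")`, and alert only after several consecutive failures. That avoids paging on a single dropped request.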

Upon investigation, we found the following in the kernel log:

Mar 5 19:33:00 nlcp03 kernel: mlx5_core 0000:c1:00.0 enp193s0f0np0: Error cqe on cqn 0x8e, ci 0x1b1, qn 0x115c, opcode 0xd, syndrome 0x4, vendor syndrome 0x51
Mar 5 19:33:00 nlcp03 kernel: 00000000: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000010: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000020: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000030: 00 00 00 00 01 00 51 04 0e 00 11 5c 29 67 4a d3
Mar 5 19:33:00 nlcp03 kernel: WQE DUMP: WQ size 1024 WQ cur size 0, WQE index 0x167, len: 192
Mar 5 19:33:00 nlcp03 kernel: 00000000: 00 29 67 0e 00 11 5c 09 00 00 00 08 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000010: 00 00 00 00 c0 00 05 a8 00 00 00 00 00 42 00 1c
Mar 5 19:33:00 nlcp03 kernel: 00000020: 73 00 09 99 b8 59 9f c7 43 ce 08 00 45 20 fe bc
Mar 5 19:33:00 nlcp03 kernel: 00000030: 46 54 40 00 40 06 31 fb d5 b8 55 0c 6d f8 2b 0f
Mar 5 19:33:00 nlcp03 kernel: 00000040: b5 66 00 16 e0 2f 67 38 20 11 80 0a 80 18 70 03
Mar 5 19:33:00 nlcp03 kernel: 00000050: c2 7b 00 00 01 01 08 0a 28 b6 d5 10 17 be b6 a8
Mar 5 19:33:00 nlcp03 kernel: 00000060: 00 00 7a a4 00 00 11 00 00 00 00 00 ad 3a 05 5c
Mar 5 19:33:00 nlcp03 kernel: 00000070: 00 00 80 00 00 00 11 00 00 00 00 00 cc 59 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000080: 00 00 03 e4 00 00 11 00 00 00 00 00 e7 03 80 00
Mar 5 19:33:00 nlcp03 kernel: 00000090: 04 4b 00 00 01 01 08 0a 28 b6 d4 21 17 be b5 de
Mar 5 19:33:00 nlcp03 kernel: 000000a0: 00 00 40 58 00 00 11 00 00 00 00 00 ad 7b 8d e4
Mar 5 19:33:00 nlcp03 kernel: 000000b0: 00 00 39 50 00 00 11 00 00 00 00 00 c4 5a c0 00
Mar 5 19:33:00 nlcp03 kernel: mlx5_core 0000:c1:00.0 enp193s0f0np0: ERR CQE on SQ: 0x115c
Mar 5 19:33:00 nlcp03 kernel: mlx5_core 0000:c1:00.0 enp193s0f0np0: Error cqe on cqn 0x59, ci 0x222, qn 0x1107, opcode 0xd, syndrome 0x4, vendor syndrome 0x51
Mar 5 19:33:00 nlcp03 kernel: 00000000: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000010: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000020: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000030: 00 00 00 00 01 00 51 04 0a 00 11 07 b2 75 47 d2
Mar 5 19:33:00 nlcp03 kernel: WQE DUMP: WQ size 1024 WQ cur size 0, WQE index 0x275, len: 64
Mar 5 19:33:00 nlcp03 kernel: 00000000: 00 b2 75 0a 00 11 07 04 00 00 00 08 00 00 00 00
Mar 5 19:33:00 nlcp03 kernel: 00000010: 00 00 00 00 c0 00 00 00 00 00 00 00 00 12 00 1c
Mar 5 19:33:00 nlcp03 kernel: 00000020: 73 00 09 99 b8 59 9f c7 43 ce 08 00 45 01 05 00
Mar 5 19:33:00 nlcp03 kernel: 00000030: 00 00 04 fc 00 00 11 00 00 00 00 00 b1 d6 80 14
Mar 5 19:33:00 nlcp03 kernel: mlx5_core 0000:c1:00.0 enp193s0f0np0: ERR CQE on SQ: 0x1107

This isn't ideal. It means the NIC reported error CQEs (Completion Queue Entries) on two of its SQs (Send Queues), 0x115c and 0x1107, which prevented it from processing packets correctly.

This can happen for a number of reasons, ranging from a simple driver issue to faulty hardware.
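When a server logs many of these errors, it helps to see which queues are affected and how often. The following minimal Python sketch groups the error CQE summary lines by interface and queue number. It assumes the exact line format shown above, which may differ between kernel and driver versions. The regex and function names are hypothetical, and the sketch does not try to decode what the syndrome values mean.

```python
import re
from collections import defaultdict

# Assumed format: the mlx5_core "Error cqe" summary line as it appears in the log above.
ERR_CQE_RE = re.compile(
    r"(?P<iface>\S+): Error cqe on cqn (?P<cqn>0x[0-9a-f]+), ci 0x[0-9a-f]+, "
    r"qn (?P<qn>0x[0-9a-f]+), opcode (?P<opcode>0x[0-9a-f]+), "
    r"syndrome (?P<syndrome>0x[0-9a-f]+), vendor syndrome (?P<vendor>0x[0-9a-f]+)"
)


def summarize_error_cqes(lines):
    """Group error CQEs by (interface, queue number) so repeat offenders stand out."""
    summary = defaultdict(list)
    for line in lines:
        m = ERR_CQE_RE.search(line)
        if m is None:
            continue  # hex dumps, WQE dumps and "ERR CQE on SQ" lines are skipped
        summary[(m["iface"], int(m["qn"], 16))].append({
            "cqn": int(m["cqn"], 16),
            "opcode": int(m["opcode"], 16),
            "syndrome": int(m["syndrome"], 16),
            "vendor_syndrome": int(m["vendor"], 16),
        })
    return dict(summary)
```

Fed the log lines above, for example from a saved copy of the kernel log, the sketch reports one error each for queues 0x115c and 0x1107 on enp193s0f0np0. Both errors carry syndrome 0x4 and vendor syndrome 0x51.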

However, the system became responsive again, and we saw no further issues for roughly an hour. Eventually, though, we started seeing TX (transmit) timeouts:

[6645621.018524] mlx5_core 0000:c1:00.0 enp193s0f0np0: TX timeout detected
[6645621.018551] mlx5_core 0000:c1:00.0 enp193s0f0np0: TX timeout on queue: 10, SQ: 0x1107, CQ: 0x59, SQ Cons: 0x9204 SQ Prod: 0x9210, usecs since last trans: 310839000
[6645621.018565] mlx5_core 0000:c1:00.0 enp193s0f0np0: TX timeout on queue: 28, SQ: 0x1161, CQ: 0xb3, SQ Cons: 0x70b0 SQ Prod: 0x70cd, usecs since last trans: 66129000
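These timeout lines contain enough numbers to quantify the stall. The gap between the SQ producer index ("SQ Prod") and consumer index ("SQ Cons") counts descriptors the driver has posted but the NIC has not yet completed. "usecs since last trans" shows how long the queue has been idle. The minimal Python sketch below extracts both. It assumes the line format shown above and assumes the indices are 16-bit counters, so the subtraction is masked to survive wrap-around. The names `TxTimeout` and `parse_tx_timeouts` are hypothetical.

```python
import re
from dataclasses import dataclass

# Assumed format: the mlx5_core "TX timeout on queue" line as shown in the log above.
TX_TIMEOUT_RE = re.compile(
    r"TX timeout on queue: (?P<queue>\d+), SQ: (?P<sq>0x[0-9a-f]+), CQ: (?P<cq>0x[0-9a-f]+), "
    r"SQ Cons: (?P<cons>0x[0-9a-f]+) SQ Prod: (?P<prod>0x[0-9a-f]+), "
    r"usecs since last trans: (?P<usecs>\d+)"
)


@dataclass
class TxTimeout:
    queue: int
    sq: int
    cq: int
    pending: int            # descriptors posted by the driver but not yet completed
    seconds_stalled: float  # time since the queue last transmitted


def parse_tx_timeouts(text: str) -> list[TxTimeout]:
    """Extract per-queue stall information from TX timeout log lines."""
    result = []
    for m in TX_TIMEOUT_RE.finditer(text):
        cons, prod = int(m["cons"], 16), int(m["prod"], 16)
        result.append(TxTimeout(
            queue=int(m["queue"]),
            sq=int(m["sq"], 16),
            cq=int(m["cq"], 16),
            # Assumes 16-bit producer/consumer counters; masking keeps the
            # difference correct when the producer has wrapped past 0xffff.
            pending=(prod - cons) & 0xFFFF,
            seconds_stalled=int(m["usecs"]) / 1_000_000,
        ))
    return result
```

Applied to the log above, this yields 12 and 29 pending descriptors. Queue 10 had been idle for about 311 seconds, and its send queue is SQ 0x1107, the same one that reported an error CQE earlier.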

We eventually decided to reboot the system, hoping the initial driver error was a one-off, since unloading and reloading the network driver (which a reboot does) can sometimes clear such problems.

Unfortunately, the system remained unstable, with NIC errors continuing to appear at random.

There is a somewhat known compatibility issue between Mellanox NICs (which we use) and 2nd-generation AMD EPYC processors, such as the EPYC 7402P in this particular server.

One possible workaround for this stability issue is adding iommu=pt to the kernel boot parameters via GRUB, which puts the IOMMU in passthrough mode for devices used by the host and sometimes resolves the problem. We applied it to the system, which took a few more reboots, but it ultimately didn't resolve the issue.
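A boot flag set through GRUB takes effect only after the boot entries are updated and the machine is rebooted. You can check which flags the running kernel actually received in `/proc/cmdline`. The minimal Python sketch below parses that string and reports whether `iommu=pt` is set. The helper names are hypothetical. The parser splits on whitespace and does not handle quoted values that contain spaces.

```python
def kernel_param(cmdline: str, name: str):
    """Return the value of `name` on a kernel command line, or None if absent.

    A bare flag (e.g. "quiet") returns "". If the parameter appears more than
    once, this sketch returns the last occurrence. Quoted values containing
    spaces are not handled.
    """
    value = None
    for token in cmdline.split():
        key, sep, val = token.partition("=")
        if key == name:
            value = val if sep else ""
    return value


def iommu_passthrough_enabled(cmdline: str) -> bool:
    """True if the command line requests IOMMU passthrough via iommu=pt."""
    return kernel_param(cmdline, "iommu") == "pt"
```

On a Linux host, one would call `iommu_passthrough_enabled(open("/proc/cmdline").read())` after rebooting to confirm that the flag is active.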

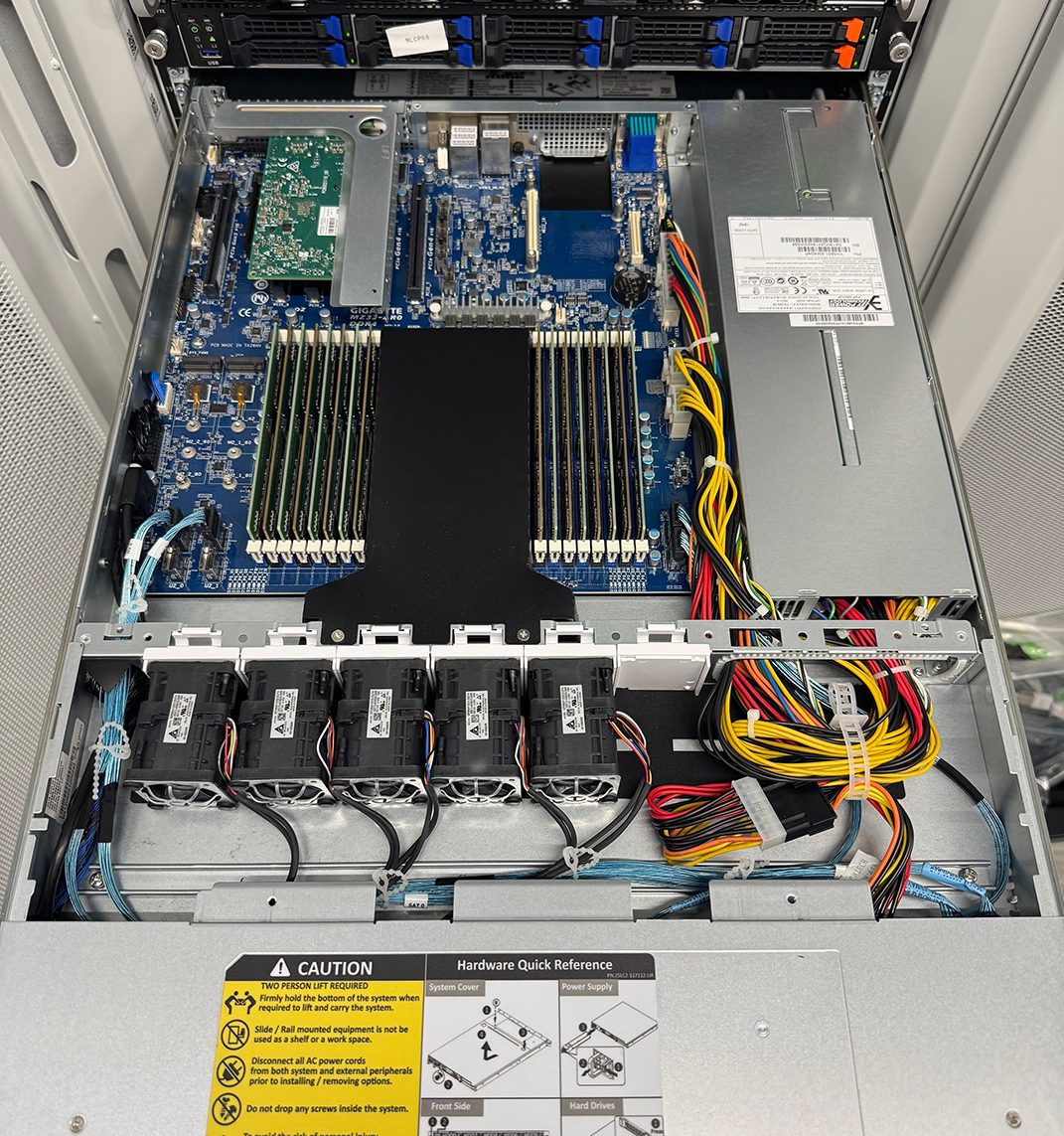

This suggested an actual hardware failure, or hardware on the verge of failing completely. We packed our things, including a few spare NICs, and headed to the datacenter, arriving at the facility around 12.15am UTC. After a bit of preparation, we powered off nlcp03 and moved its drives to a spare server we keep available in the rack, and at 12.41am UTC the system was back online.

We've since replaced the Mellanox NIC in the old system with a spare, and we're testing the rest of the hardware to determine whether anything besides the NIC is at fault. We're also testing the suspected faulty NIC independently.

Overall, we estimate the downtime at about one hour. The exact figure is hard to pin down, since TCP connections occasionally worked depending on the visitor, but our traffic graphs point to around an hour, as traffic otherwise remained at normal levels for that time of day.
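To show how such an estimate can be derived from traffic data, here is a minimal Python sketch. It does not describe how our graphs are produced. It assumes per-minute request counts plus a baseline of normal traffic for each minute. The 50% threshold is an arbitrary illustrative choice, and the function name is hypothetical. The sketch counts minutes that fall well below the baseline rather than only minutes with zero traffic. That suits a partial outage like this one, where some connections still succeeded.

```python
def estimate_downtime_minutes(observed, baseline, threshold=0.5):
    """Count minutes where traffic fell below `threshold` times the expected level.

    `observed` and `baseline` are equal-length sequences of per-minute request
    counts; `baseline` is what traffic normally looks like at that time of day.
    Minutes with a zero baseline are ignored, since they carry no signal.
    """
    if len(observed) != len(baseline):
        raise ValueError("observed and baseline must have the same length")
    return sum(
        1
        for seen, expected in zip(observed, baseline)
        if expected > 0 and seen < threshold * expected
    )
```

For example, `estimate_downtime_minutes([100, 40, 10, 95], [100, 100, 100, 100])` counts the two minutes that dropped below half of the usual traffic.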

Conclusion

Hardware failures are unfortunate but inevitable, which is why we're glad to keep spare equipment on-site at the datacenter, including motherboards, network cards, RAM, drives, fans, and even CPUs.

This is actually the first time we've experienced this type of hardware failure with our own equipment. That said, after more than a decade in the hosting industry working on various infrastructure projects, we've encountered our fair share of hardware issues and complications.

On the bright side, we at least got a picture of the inside of the affected server to share!

nlsh02 - nlsh03 migration planned

On March 2, we scheduled the migration of nlsh02 and nlsh03.

These are the last two servers we originally leased from WorldStream while building our Grid Hosting platform. Now that we've completed our datacenter migration to Iron Mountain AMS1 with our own equipment, it makes sense to move customers off these remaining servers as well.

Affected customers will be moved to newer, faster hardware on IP addresses we own, and the migration also allows us to standardize our hardware configurations.

The first customers have already been migrated, and we will continue the migration over the next month or so.

Billing system upgraded

We recently updated our billing system, which is based on WHMCS. The update brings a few new features and a number of bug fixes across the system. While there's nothing major on the customer-facing side, it does resolve a couple of issues we had with the older version.

We also wanted to upgrade before the previous version reaches end-of-life.

About the Author

Lucas Rolff

Hosting Guru & Founder

Lucas is the founder and technical lead at PerfGrid, with over 15 years of experience in web hosting, performance optimization, and server infrastructure. He specializes in building high-performance hosting solutions and dealing with high-traffic websites.

Areas of expertise include: Web Hosting, Performance Optimization, Server Hardware